How to turn metrics into actionable insights

Analytics MCP + Figma API = Magic

Last quarter, a design system dashboard showed 87% adoption. The team celebrated.

Then a VP of Product asked:

“Do we know how this affects our conversion rates?”

Silence.

The team could report how many times their button pattern was implemented. They couldn’t tell you if users could actually complete their tasks.

You can change this by bringing an analytics MCP server closer to your design work. By Analytics MCP, I mean PostHog MCP, Mixpanel MCP, … Let’s go through the flow and see what is possible 👇

Component or pattern adoption doesn’t equal user success

By the way, a pattern is a reusable combination of components that solves a specific user need. For example, a searchable filter (dropdown + input + list), a checkout flow (form + buttons + validation), or a file upload experience (button + progress + feedback).

What we typically measure:

Component and pattern library adoption rates (87% of features use our system)

Component usage counts (button used 1.3M times this month)

Documentation page views (developers are reading our docs)

GitHub stars and npm downloads (popularity)

Number of teams using the system (adoption)

What we also need to measure:

Do users complete their tasks?

Where do they get stuck in the UI?

Which patterns cause confusion or errors?

How do design decisions impact conversion?

You can have 100% pattern adoption and still ship a terrible user experience. 🫠

Adoption metrics describe internal system health: how widely developers use the system. User behavior metrics tell you about external impact: whether the system actually works for the people using your product.

The wake-up call that changed everything

A team thought their new searchable filtering pattern was a success. It was used in 23 features, and the adoption metric showed an 89% usage rate.

Then they looked at actual user behavior:

34% abandonment rate on forms using this pattern

Average of 4.2 attempts before users gave up

127 rage clicks per day

Users reverting to browser search instead

High pattern adoption. Terrible user experience.

Adoption metrics alone would suggest doubling down on the search pattern. User behavior metrics tell you the opposite.
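To make the gap concrete, here is a minimal Python sketch that computes both kinds of metric for a single pattern. The `Session` shape (a `submitted` flag and an `attempts` count per session) is a hypothetical assumption about what your event data can provide, not a PostHog or Mixpanel schema, and the numbers in the usage lines are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One user session that used the pattern (hypothetical shape)."""

    submitted: bool  # True if the user completed the form
    attempts: int  # how many times the user tried the pattern (e.g. searches)


def adoption_rate(features_using: int, eligible_features: int) -> float:
    """Internal health: share of eligible features that implement the pattern."""
    return features_using / eligible_features if eligible_features else 0.0


def behavior_summary(sessions: list[Session]) -> dict[str, float]:
    """External impact: abandonment rate and mean attempts before giving up."""
    abandoned = [s for s in sessions if not s.submitted]
    abandonment = len(abandoned) / len(sessions) if sessions else 0.0
    mean_attempts = (
        sum(s.attempts for s in abandoned) / len(abandoned) if abandoned else 0.0
    )
    return {
        "abandonment_rate": abandonment,
        "mean_attempts_before_abandoning": mean_attempts,
    }


# Illustrative numbers only: a pattern can score high on the first metric
# and badly on the second at the same time.
print(adoption_rate(9, 10))
print(behavior_summary([Session(True, 1), Session(False, 4), Session(False, 5), Session(True, 2)]))
```

The two functions deliberately take different inputs: adoption comes from your codebase, behavior comes from your users, and neither can be derived from the other.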

Why product analytics change the game for design systems

Note: This article focuses on PostHog with MCP, but the concepts apply to any product analytics tool. Mixpanel also offers an MCP server, and other analytics platforms can provide similar insights.

Product analytics tools like PostHog don’t just track pattern usage. They track how users actually interact with your product’s interface.

Example 1

Session recordings showed users scrolling past tabs without realizing they were interactive. User interviews revealed they expected mobile content to be vertically stacked, not hidden.

The Adoption: 92% (High)

The Reality: 67% of users never clicked beyond the first tab

The Fix: Switched tabs to accordions on mobile → Task completion jumped from 23% to 71%

Example 2

Low adoption almost got the inline validation pattern deprecated. User behavior data revealed it was the highest-performing pattern in the entire system.

The Adoption: 8% (Low)

The Reality: 89% completion rate with inline validation vs. 34% without

The Fix: Made inline validation the default → Form conversions improved 2.6x across the product

Example 3

Bottom sheet modals worked beautifully on mobile but confused desktop users who couldn’t find the content and thought it was an error.

The Adoption: High across 34 features

The Reality: Mobile: 78% completion (strong) | Desktop: 31% completion (disaster)

The Fix: Created device-specific modal patterns → Desktop got traditional centered modals

Data finds problems, research explains why

Important: Analytics data is powerful for identifying where problems exist, but it rarely tells you why they exist or how to fix them.

What quantitative data gives you:

Where users drop off

How many users experience friction

Which patterns correlate with poor outcomes

When problems started occurring

What you still need qualitative research for:

Why users are struggling

What users are trying to accomplish

How users think about the interface

What solutions would actually work

Now let’s use MCP

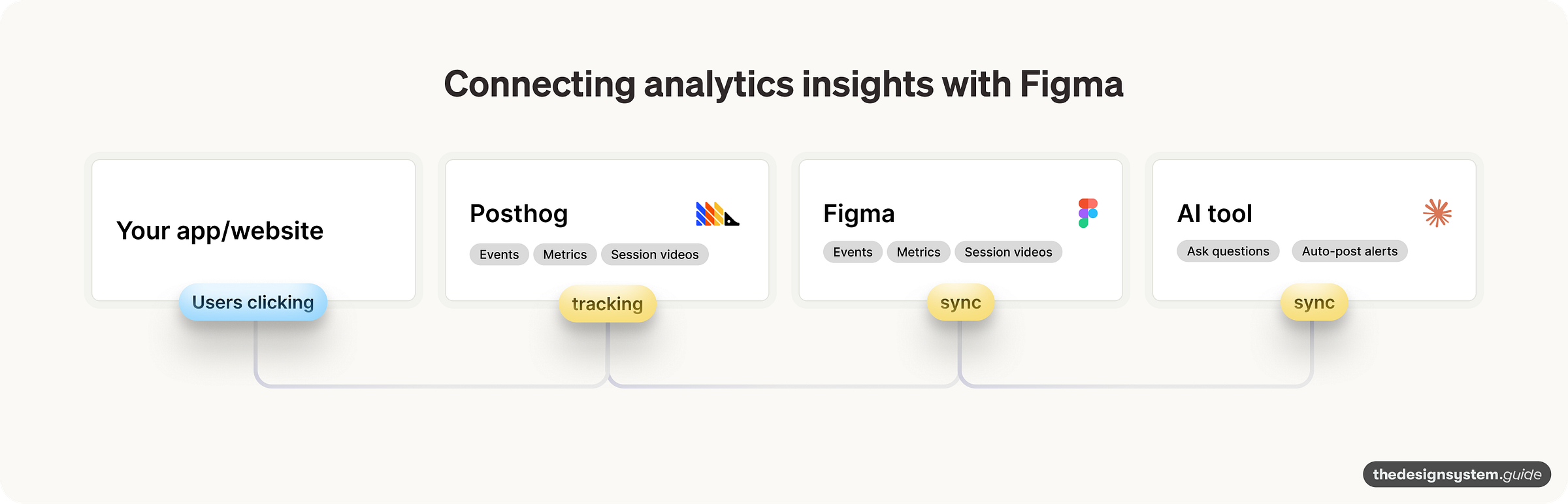

What is MCP? Think of Model Context Protocol (MCP) as a universal translator. It allows your AI (like Claude) to talk directly to your tools (like PostHog for analytics or Figma for design files) to fetch real-time data. Instead of copy-pasting screenshots or exporting CSVs, you just ask questions in plain English.

Connecting PostHog to Claude via MCP lets you ask questions about actual user behavior, not just pattern adoption. For example:

“Where do users struggle most in our checkout flow, and which UI patterns correlate with drop-off?”

“Which form patterns have the highest completion rates, and what’s different about them?”

What PostHog MCP connects

User journey analytics

Where users enter flows

Where they abandon tasks

How they navigate between features

What paths lead to success vs failure

Interaction patterns

Which UI elements users engage with

Where rage clicks happen

Form field abandonment points

Navigation confusion patterns

Outcome tracking

Task completion rates

Conversion funnel performance

Feature adoption over time

Error rates and recovery patterns

Segment analysis

Mobile vs desktop behavior differences

New vs returning user patterns

Geographic or demographic variations

A/B test performance by segment
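As a rough illustration of the kind of question the assistant sends to PostHog, here is a Python sketch that builds a HogQL query body in the shape PostHog's query API accepts (`{"query": {"kind": "HogQLQuery", "query": ...}}`). It assumes your events carry a custom property naming the design-system pattern (the name `pattern` is hypothetical) and that forms emit `form_started` and `form_submitted` events. `$device_type` is assumed to be the device property PostHog's web SDK attaches, which provides the mobile vs desktop split.

```python
def pattern_funnel_query(pattern_property: str = "pattern", days: int = 30) -> dict:
    """Build a PostHog query-API body counting form starts/submits per pattern and device.

    Assumes a custom event property (hypothetical name: `pattern`) identifies
    the design-system pattern that rendered the form.
    """
    # The property name is interpolated into the query, so only allow plain identifiers.
    if not pattern_property.isidentifier():
        raise ValueError(f"unsafe property name: {pattern_property!r}")
    hogql = f"""
        SELECT
            properties.{pattern_property} AS pattern,
            properties.$device_type AS device,
            countIf(event = 'form_started') AS started,
            countIf(event = 'form_submitted') AS submitted
        FROM events
        WHERE timestamp > now() - INTERVAL {int(days)} DAY
          AND properties.{pattern_property} IS NOT NULL
        GROUP BY pattern, device
        ORDER BY started DESC
    """
    return {"query": {"kind": "HogQLQuery", "query": hogql.strip()}}


body = pattern_funnel_query()
print(body["query"]["query"])
```

Each row of the result is one pattern on one device type, which covers the "where they abandon tasks" and "mobile vs desktop" items from the list above in a single query.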

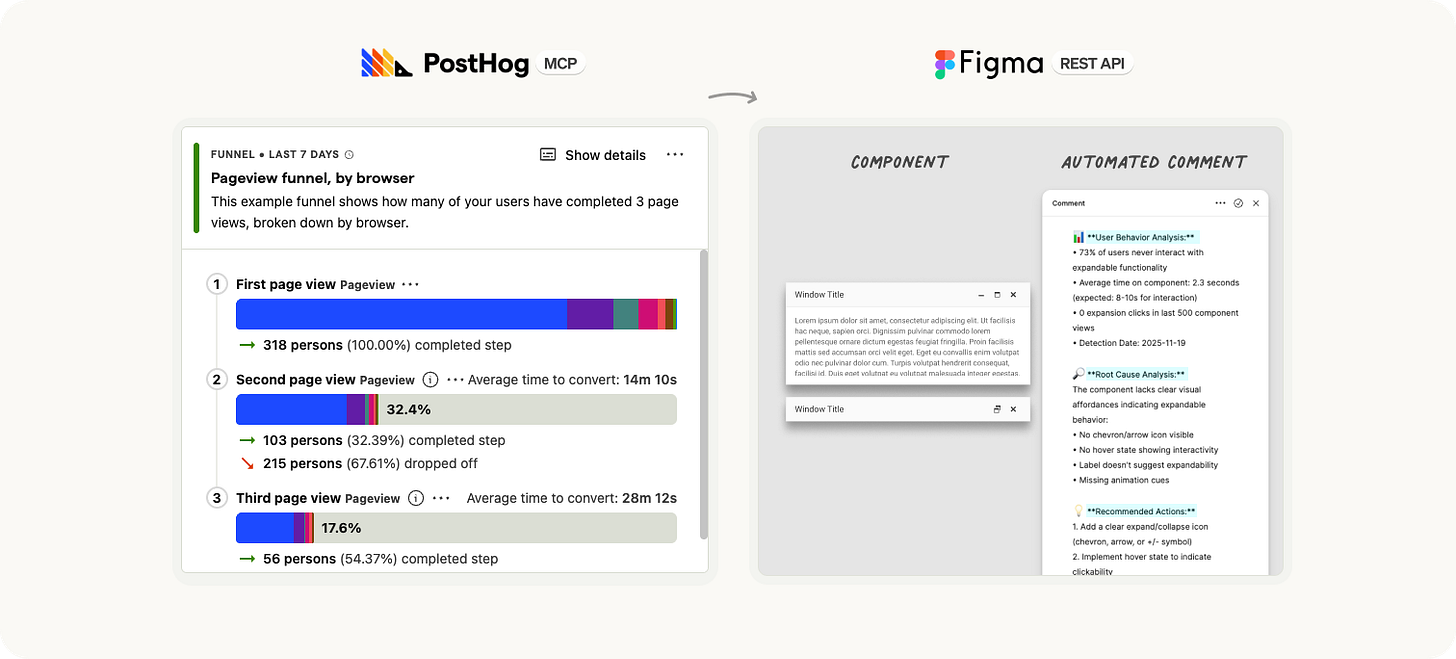

Closing the loop: Analytics → PostHog → Figma → Design updates

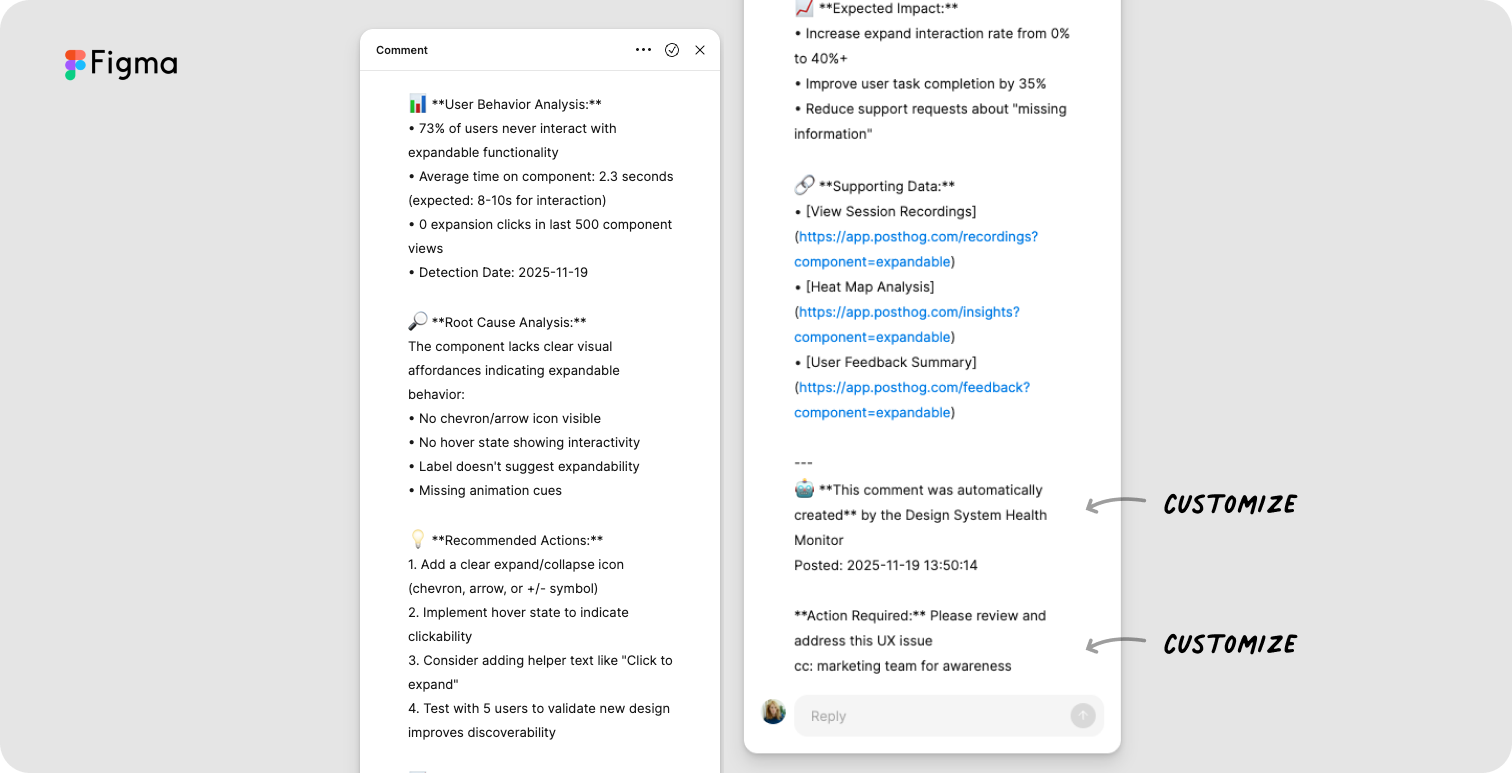

Here’s where it gets really powerful: connecting PostHog MCP to Figma’s REST API to automatically generate design feedback based on real user behavior.

The workflow:

MCP queries PostHog: Claude analyzes user behavior data for specific patterns

Identifies issues: Finds patterns with high drop-off, rage clicks, or poor completion rates

Connects to Figma: Uses the Figma REST API to find the design files for those patterns

Generates contextual comments: Automatically adds comments to Figma with:

The specific user behavior problem

Session recording links

Quantitative metrics (drop-off rates, error counts)

Suggested improvements based on the data
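The identify, connect, and comment steps above can be sketched in plain Python under one naming convention: the Figma component or frame for a pattern has exactly the same name as the `pattern` property on your analytics events (for example `SearchableFilter`). That convention is an assumption, not something Figma or PostHog enforces. The node tree shape (`id`, `name`, `type`, `children`) follows the `document` object returned by Figma's `GET /v1/files/:key`, and the payload (`message` plus `client_meta` with `node_id` and `node_offset`) follows Figma's `POST /v1/files/:key/comments`. The 30% threshold and the default suggestion text are arbitrary placeholders.

```python
from typing import Optional


def find_nodes_by_name(root: dict, name: str) -> list[dict]:
    """Depth-first search of a Figma node tree for nodes with exactly this name."""
    matches, stack = [], [root]
    while stack:
        node = stack.pop()
        if node.get("name") == name:
            matches.append(node)
        # Push children in reverse so they are visited in document order.
        stack.extend(reversed(node.get("children", [])))
    return matches


def drop_off(started: int, submitted: int) -> float:
    """Share of started forms that were never submitted."""
    return 1 - submitted / started if started else 0.0


def build_comment(pattern: str, started: int, submitted: int, node_id: str,
                  recordings: list[str], suggestion: str) -> dict:
    """Comment body for Figma's comments endpoint, pinned to the node's top-left corner."""
    lines = [
        f"[Analytics] {pattern}: {drop_off(started, submitted):.0%} drop-off "
        f"({submitted} of {started} started forms were submitted)."
    ]
    if recordings:
        lines.append("Session recordings: " + ", ".join(recordings))
    lines.append(f"Suggested next step: {suggestion}")
    return {
        "message": "\n".join(lines),
        "client_meta": {"node_id": node_id, "node_offset": {"x": 0, "y": 0}},
    }


def comments_for_file(document: dict, metrics: dict[str, tuple[int, int]],
                      threshold: float = 0.3,
                      recordings: Optional[dict[str, list[str]]] = None,
                      suggestions: Optional[dict[str, str]] = None) -> list[dict]:
    """One comment payload per Figma node whose pattern exceeds the drop-off threshold.

    `metrics` maps pattern name -> (started, submitted), e.g. from a PostHog query.
    """
    recordings = recordings or {}
    suggestions = suggestions or {}
    payloads = []
    for pattern, (started, submitted) in sorted(metrics.items()):
        if drop_off(started, submitted) <= threshold:
            continue  # at or below the threshold: no comment
        for node in find_nodes_by_name(document, pattern):
            payloads.append(build_comment(
                pattern, started, submitted, node["id"],
                recordings.get(pattern, []),
                suggestions.get(pattern, "Validate with user research before redesigning."),
            ))
    return payloads


document = {
    "id": "0:0", "name": "Document", "type": "DOCUMENT",
    "children": [{
        "id": "1:1", "name": "Patterns", "type": "CANVAS",
        "children": [
            {"id": "2:1", "name": "SearchableFilter", "type": "COMPONENT"},
            {"id": "2:2", "name": "FileUpload", "type": "COMPONENT"},
        ],
    }],
}
for payload in comments_for_file(document, {"SearchableFilter": (100, 60), "FileUpload": (100, 95)}):
    print(payload["message"])
```

Exact name matching keeps the mapping predictable. The trade-off is that renaming a Figma layer silently breaks the link, so the naming convention has to be shared between design and the analytics tracking plan.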

Why this matters:

Designers see real impact

Context at design time

Faster feedback loop

Prioritization becomes obvious

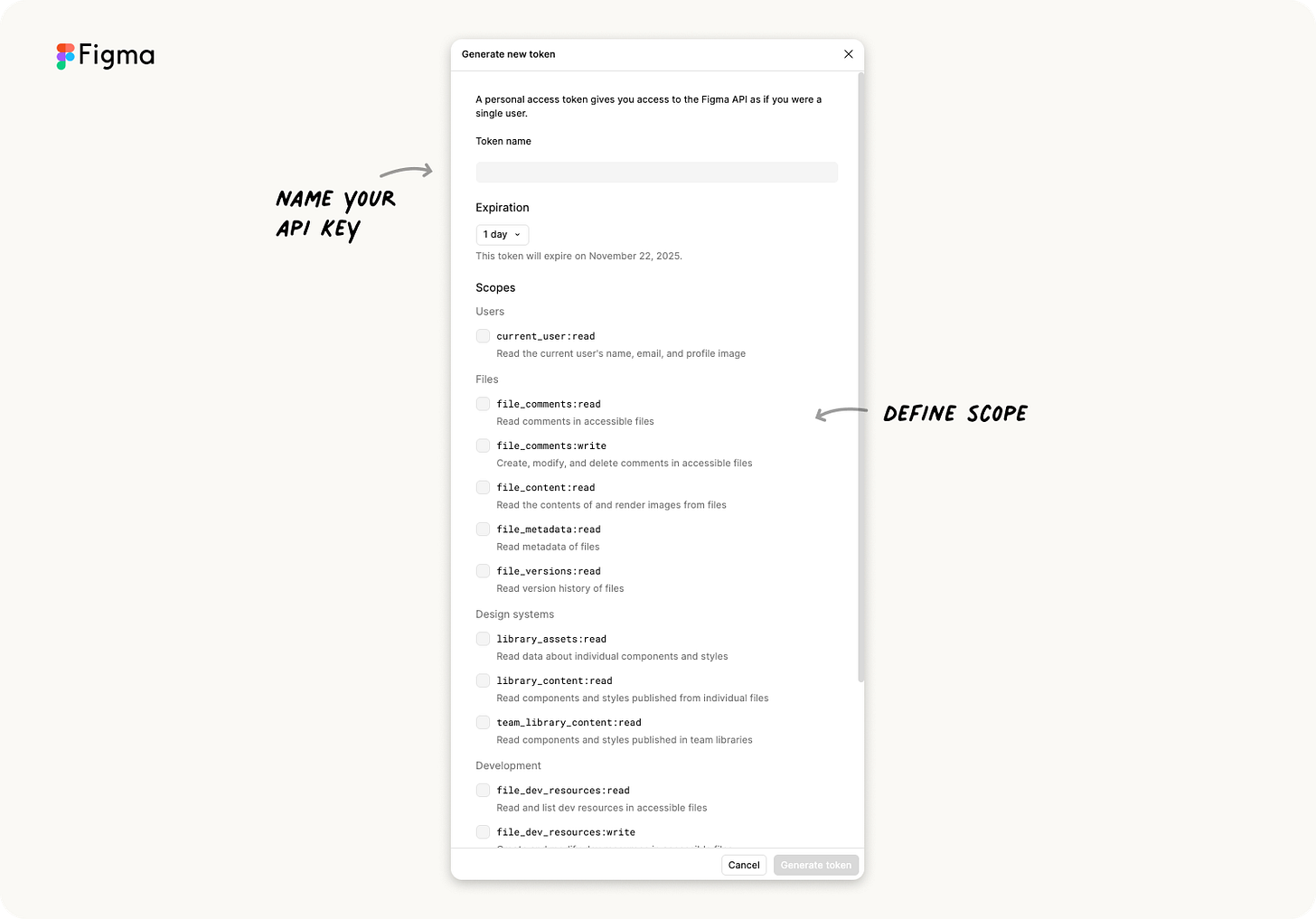

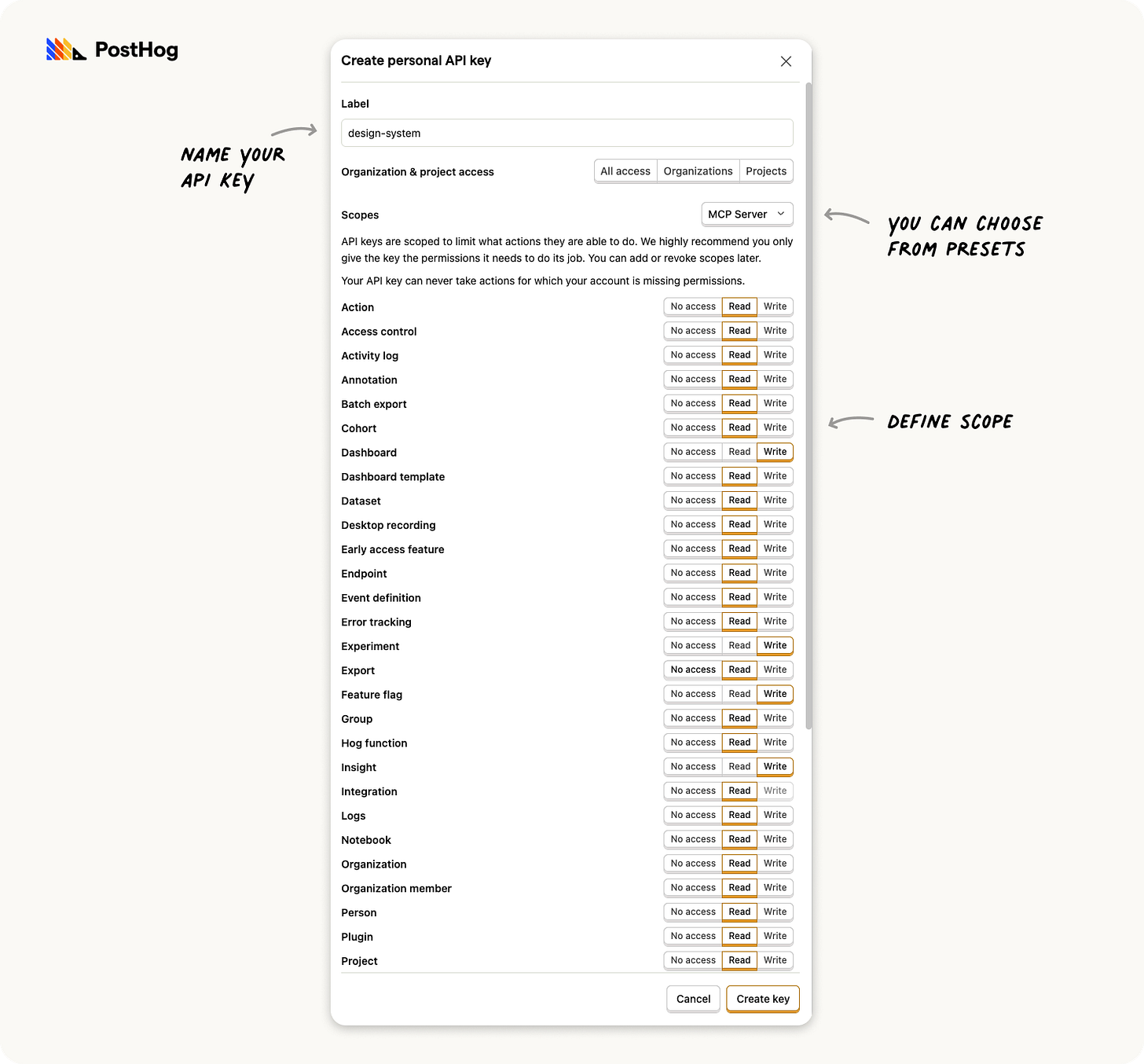

The technical setup:

PostHog personal API key: Queries user behavior analytics

Figma API key (personal access token): Reads Figma files and posts comments on them

Claude / Cursor: Connects the dots and generates insights
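The setup above boils down to a few authenticated HTTP calls. Here is a minimal sketch using only Python's standard library. Assumptions to verify against the official docs: PostHog's query endpoint lives at `/api/projects/<project_id>/query/` and accepts a personal API key as a Bearer token, and Figma's REST API lives at `https://api.figma.com/v1` and accepts a personal access token in the `X-Figma-Token` header. The `us.posthog.com` host is also an assumption; EU Cloud and self-hosted instances use a different base URL. The builder functions only construct requests, and `send()` is the only place a network call happens.

```python
import json
import urllib.request

POSTHOG_HOST = "https://us.posthog.com"  # assumption: change for EU Cloud or self-hosted
FIGMA_API = "https://api.figma.com/v1"


def posthog_query_request(api_key: str, project_id: str, hogql: str) -> urllib.request.Request:
    """POST a HogQL query to PostHog's query API using a personal API key."""
    body = {"query": {"kind": "HogQLQuery", "query": hogql}}
    return urllib.request.Request(
        f"{POSTHOG_HOST}/api/projects/{project_id}/query/",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def figma_file_request(token: str, file_key: str) -> urllib.request.Request:
    """GET a Figma file's JSON, including its `document` node tree."""
    return urllib.request.Request(
        f"{FIGMA_API}/files/{file_key}",
        headers={"X-Figma-Token": token},
        method="GET",
    )


def figma_comment_request(token: str, file_key: str, payload: dict) -> urllib.request.Request:
    """POST a comment payload (e.g. {"message": ..., "client_meta": ...}) to a Figma file."""
    return urllib.request.Request(
        f"{FIGMA_API}/files/{file_key}/comments",
        data=json.dumps(payload).encode("utf-8"),
        headers={"X-Figma-Token": token, "Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Perform the call and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Keeping request construction separate from `send()` makes it easy to log or dry-run what would be posted to Figma before letting an assistant write comments on real files.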

The AI-driven insights advantage

It’s not just about accessing data faster. It’s about turning weeks of manual analysis into seconds of conversation.

“Where are users abandoning our onboarding flow?”

Claude (analyzing PostHog funnels): “68% complete email verification, but 43% drop off at the profile photo upload step. Session recordings show users clicking ‘Skip’ but the button doesn’t respond. Your UploadButton component works, but the skip handler is broken.”

“Why is form completion so low on the settings page?”

Claude (analyzing session recordings and events): “Users fill out the form, click Save, nothing happens. They click 3-4 more times, then leave. Your Button component is working, but the form validation is failing silently. No error message shown.”

“Which pattern is causing the most user frustration?”

PostHog MCP query: Claude runs insights-get-insight to find the funnels with the highest drop-off between pattern-specific events such as form_started and form_submitted.

Claude: “Your date picker pattern has a 43% abandonment rate on mobile devices. Session recordings show users repeatedly tapping the calendar icon—it requires 2-3 taps to open.”
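Behind an answer like this is a simple ranking over funnel counts. A minimal sketch, assuming rows shaped like a per-pattern, per-device breakdown of `form_started` and `form_submitted` counts. The counts below are illustrative, not real data, and the minimum of 200 starts is an arbitrary guard against small, noisy segments, not a PostHog setting.

```python
from typing import NamedTuple


class FunnelRow(NamedTuple):
    pattern: str
    device: str
    started: int  # form_started events
    submitted: int  # form_submitted events


def rank_by_drop_off(rows: list[FunnelRow], min_started: int = 200) -> list[tuple[str, str, float]]:
    """Return (pattern, device, drop-off) sorted from worst to best.

    Segments with fewer than `min_started` starts are skipped, since a handful
    of sessions can produce extreme but meaningless rates.
    """
    scored = [
        (1 - row.submitted / row.started, row)
        for row in rows
        if row.started >= max(min_started, 1)  # also avoids dividing by zero
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(row.pattern, row.device, round(rate, 3)) for rate, row in scored]


rows = [
    FunnelRow("DatePicker", "Mobile", 1000, 570),
    FunnelRow("DatePicker", "Desktop", 800, 720),
    FunnelRow("Tabs", "Mobile", 50, 10),  # too few starts to rank
]
for pattern, device, rate in rank_by_drop_off(rows):
    print(f"{pattern} on {device}: {rate:.0%} drop-off")
```

The ranking tells you where to look; the session recordings are what explain why the date picker fails on mobile.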

“What’s the conversion rate difference between the new A/B tested modal pattern and the old one?”

PostHog MCP query: Claude uses list-experiments and insights-get-insight to retrieve A/B test results comparing conversion events.

Claude: “Old modal pattern: 23% conversion. New modal pattern: 19% conversion on desktop, but 48% on mobile. Recommend device-specific implementation.”
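A device-specific recommendation like this comes from comparing variants within each segment instead of only in aggregate. A minimal sketch with hypothetical counts: the old pattern's per-device numbers are invented for illustration, since only its overall rate is given above. The sketch ignores statistical significance, which you would still want to check before acting on a split like this.

```python
Counts = tuple[int, int]  # (conversions, exposures)


def rate(counts: Counts) -> float:
    """Conversion rate for one variant in one segment."""
    conversions, exposures = counts
    return conversions / exposures if exposures else 0.0


def winners_by_segment(results: dict[str, dict[str, Counts]]) -> dict[str, str]:
    """For each segment, the variant with the highest conversion rate."""
    return {
        segment: max(variants, key=lambda name: rate(variants[name]))
        for segment, variants in results.items()
    }


def recommend(results: dict[str, dict[str, Counts]]) -> str:
    winners = winners_by_segment(results)
    if len(set(winners.values())) > 1:
        # Different segments prefer different variants.
        pairs = ", ".join(f"{seg}={var}" for seg, var in sorted(winners.items()))
        return f"device-specific implementation: {pairs}"
    return f"ship '{next(iter(winners.values()))}' everywhere"


results = {
    "desktop": {"old": (230, 1000), "new": (190, 1000)},
    "mobile": {"old": (230, 1000), "new": (480, 1000)},
}
print(recommend(results))
```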

Where to start

If you have no analytics:

Start with free tiers (PostHog, Mixpanel, Amplitude)

Track just 2-3 critical flows

If you have analytics:

Partner with your product analytics team

Ask them to create dashboards for your patterns

Schedule weekly/monthly reviews of the data

If you can’t set up MCP today (low-code alternative):

Even without the technical setup, you can start immediately:

Ask your data team this one question: “Can we tag our analytics events with the component/pattern name?”

Manually export data from your analytics platform weekly

Create a simple spreadsheet linking patterns to user outcomes

Share findings in design reviews and sprint planning
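If someone on the team is comfortable with a small script, the spreadsheet step can be automated. A minimal sketch, assuming every event carries a `pattern` property (a hypothetical naming convention agreed with the data team) whose value matches the pattern's name in Figma. `tag_event` only builds a payload and does not call any analytics SDK. `outcomes_csv` turns exported events into CSV rows you can paste into a spreadsheet.

```python
import csv
import io
from collections import defaultdict
from typing import Optional

PATTERN_KEY = "pattern"  # hypothetical property name; keep it identical to the Figma layer name


def tag_event(event: str, pattern: str, properties: Optional[dict] = None) -> dict:
    """Build an event payload with the design-system pattern attached."""
    return {"event": event, "properties": {**(properties or {}), PATTERN_KEY: pattern}}


def outcomes_csv(events: list[dict]) -> str:
    """Summarize exported events into pattern -> completion-rate CSV rows."""
    counts = defaultdict(lambda: {"started": 0, "submitted": 0})
    for e in events:
        pattern = e.get("properties", {}).get(PATTERN_KEY)
        if pattern is None:
            continue  # untagged events cannot be linked to a pattern
        if e["event"] == "form_started":
            counts[pattern]["started"] += 1
        elif e["event"] == "form_submitted":
            counts[pattern]["submitted"] += 1

    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["pattern", "started", "submitted", "completion_rate"])
    for pattern in sorted(counts):
        c = counts[pattern]
        completion = c["submitted"] / c["started"] if c["started"] else 0.0
        writer.writerow([pattern, c["started"], c["submitted"], f"{completion:.1%}"])
    return buffer.getvalue()


events = [
    tag_event("form_started", "SearchableFilter"),
    tag_event("form_started", "SearchableFilter"),
    tag_event("form_submitted", "SearchableFilter"),
    {"event": "form_started", "properties": {}},  # untagged: skipped
]
print(outcomes_csv(events))
```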

If you want to go deeper:

Set up comprehensive event tracking

Enable session recordings

Install MCP servers

Build this into your regular workflow

Learn more:

PostHog MCP Server Documentation - Official MCP server implementation for PostHog

PostHog Documentation - Complete guide to product analytics and session recordings

Figma API Key Documentation

Enjoy exploring, ⚡️

Romina


💎 Community Gems

✨ GitHub no longer uses toasts because of their accessibility and usability issues.

🔗 Link

Gemini 3.0 is out

🔗 Link

Token Hunter Game for your weekend fun

I had some fun building this game. It’s educational: you work through levels, starting at Level 1 with choosing primitive design tokens, and it gets harder and harder 🙃

🔗 Link

I'm looking at creating something similar and was curious if you could share how you linked the Figma files with the corresponding product data? Was there a specific naming convention that needs to be used for both the data and figma layers?

Thank you! I always look forward to your posts :)